Author: Peter Collison

We seem to be at a pivotal point in the adoption of e-assessment in our schools, colleges and universities, with both interest in it and the pace of change accelerating. This has no doubt been spurred on by countries including New Zealand and Egypt making bold moves to full digital assessment in national high-stakes summative exams, with a number of European countries looking to follow suit.

There is also a growing recognition amongst educators of the potential for digital assessment to add value to the learning journey itself. Formative assessment, often referred to as ‘assessment for learning’ as opposed to ‘assessment of learning’, is one area where schools, colleges and universities have the ability and autonomy to use an increasing number of digital tools to strengthen their assessment and feedback processes and, in turn, improve learning and attainment.

Recent reports from Jisc and Qualifications Wales both suggest that an end to pen-and-paper exams in the UK could be in sight by the middle of this decade. This transition will undoubtedly face multiple practical barriers. However, there is no reason why schools and colleges have to wait for such a big transition to be done to them when digitising formative assessment is already within their control.

In addition to the myriad of benefits digitising formative assessment can bring to teachers, assessors and educational leaders – from reducing time spent on marking, moderation and feedback to the ability to more easily derive insights from learner analytics – the benefits to learners are also clear. Digital assessment can help learners better understand their strengths and development areas and provide a more authentic assessment experience in their normal way of working.

So, which areas of formative assessment are ripe for digitisation?

Feedback first

Rather than jumping straight in with changing the way formative assessment is conducted, an easy place to start – but one which has a huge impact – is changing the way feedback is given and acted on. Using technology to make the feedback in formative assessment more meaningful can get everyone more comfortable using digital tools as an integral part of the learning journey, and have a positive impact on the way assessment technology is perceived. Establishing this buy-in is critical if more transformative technology is to be deployed – we know that confidence in using digital tools is low, particularly amongst teachers.

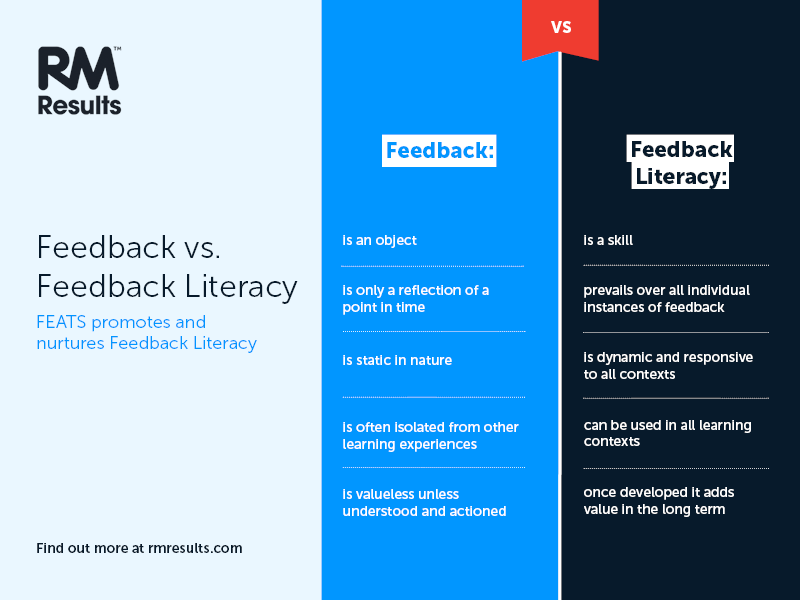

The recent Jisc report into the future of assessment talked a lot about generating feedback, but said much less about how to empower students to act on it. Feedback literacy is absolutely critical to learner development and improving attainment, and is something that technology can have a profound effect on. Our Feedback Engagement and Tracking System (FEATS), for example, can be used in FE and HE to help students become more self-aware in their learning and to develop that critical skill of feedback literacy.

FEATS provides one central place where students can collate and categorise the feedback they receive during their learning journey across multiple modules. It was built as a result of extensive research by the University of Surrey’s Learning Lab. The very process of taking ownership of recording and interpreting their own feedback has been shown to vastly improve students’ comprehension and literacy in this area. Recurring themes in their feedback can be easily identified, with links to learning resources supporting their development action plan.

Using technology as an enabler to improve learner engagement and attainment can improve the student experience in the area of assessment and feedback, something we know from the National Student Survey (NSS) is an area ripe for improvement in UK higher education.

Assessment automation & design

There are several opportunities for automation in the formative assessment process. One of these is auto-marking. We have worked with multiple awarding organisations to trial the use of AI for marking short, handwritten exam responses. Whilst the trial focused on answers of one to three words in length, there is no reason why the technology couldn’t be applied to longer sentences where appropriate. This should free up examiners and teachers to focus on the most nuanced or subjective assessment responses, or on quality-checking instances where there is less confidence in the reliability of the auto-mark.
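To make the idea of quality-checking low-confidence auto-marks concrete, here is a minimal sketch in Python. It is purely illustrative and not how any particular marking product works: the `AutoMark` record, the `confidence` score and the 0.9 threshold are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class AutoMark:
    """Hypothetical record of one auto-marked response."""
    response_id: str
    mark: int
    confidence: float  # assumed score between 0.0 and 1.0


def route_for_review(results, threshold=0.9):
    """Split results into those accepted as-is and those sent to a human.

    The threshold is an illustrative value, not a recommended setting.
    """
    accepted = [r for r in results if r.confidence >= threshold]
    review = [r for r in results if r.confidence < threshold]
    return accepted, review


results = [
    AutoMark("r1", 1, 0.98),
    AutoMark("r2", 0, 0.62),
    AutoMark("r3", 1, 0.91),
]
accepted, review = route_for_review(results)
accepted_ids = [r.response_id for r in accepted]
review_ids = [r.response_id for r in review]
```

In this toy example, only the second response falls below the threshold and would be passed to an examiner or teacher to check.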

We were pleased to see that Jisc also recognised the role that Adaptive Comparative Judgement (ACJ) should play in the future of assessment. Our RM Compare software, which is underpinned by ACJ, has been used at all levels of education, from primary to HE, with considerable success.

RM Compare automates the comparison process, asking teams of markers or ‘judges’ to individually compare pieces of work side by side. After multiple comparison rounds, it starts to give each judge pairs that others have already deemed to be of a similar standard. This helps the judges arrive at a more accurate ranking of the work. RM Compare removes the need for traditional moderation methods, making the overall assessment process faster and more reliable. When used for peer-to-peer judging in formative assessment, it has also been shown to improve learner attainment, as a recent trial by Purdue University demonstrates. Being exposed to more work and asked to make comparisons encourages learners to really consider what makes one piece of work better than another.
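For readers curious about what "adaptive" pairing means in practice, the sketch below shows the general idea in Python. It is a deliberately simplified illustration, not RM Compare's actual algorithm: it assumes a plain win rate as the quality estimate (real comparative judgement tools typically fit a statistical model), and the function names `win_rates` and `next_pairs` are invented for this example.

```python
from collections import defaultdict


def win_rates(judgements, items):
    """Estimate each item's standing as the share of comparisons it has won."""
    wins = defaultdict(int)
    seen = defaultdict(int)
    for winner, loser in judgements:
        wins[winner] += 1
        seen[winner] += 1
        seen[loser] += 1
    # Items not yet compared get a neutral score of 0.5
    return {i: (wins[i] / seen[i] if seen[i] else 0.5) for i in items}


def next_pairs(judgements, items):
    """Pair up items that currently sit next to each other in the ranking."""
    rates = win_rates(judgements, items)
    ranked = sorted(items, key=lambda i: (rates[i], i))
    return [(ranked[k], ranked[k + 1]) for k in range(0, len(ranked) - 1, 2)]


items = ["A", "B", "C", "D"]
# Each judgement is (winner, loser) from an earlier comparison round
judgements = [("A", "B"), ("C", "D"), ("A", "D"), ("C", "B")]
pairs = next_pairs(judgements, items)
```

Here A and C have won every comparison so far, while B and D have lost every one, so the next round pairs B with D and A with C. Those are the comparisons that tell the judges most about where each piece of work really sits.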

While there are lots of opportunities for technology to change the way marking is conducted, we also need to give much more consideration to what the assessment itself should actually look like. Redesigning formative assessment is a really good opportunity to think about what learners might respond better to, and to see what assessment might look like in future if summative assessment were to change.

We need more real-world assessment contexts, using tools that learners are already totally familiar with, ultimately helping to create a testing environment that they are more comfortable with. Tools like RM’s Assessment Master have been developed to help with this kind of delivery. Able to incorporate interactive and collaborative elements and live problem-solving, web-based exams are arguably a better way of assessing certain skills than a simple written task. In turn, this can give teachers a much better understanding of where a learner actually sits in their development, and what skills they might need to work on.

Digitising the formative assessment process presents a fantastic opportunity for schools to add real value to the learning experience for everyone, without having to wait for any national agenda to be implemented. We believe that some of the technology outlined in this article can help make a potential transition to full digital assessment quicker, smoother, and more effective.